diff --git a/cv/detection/centermask2/pytorch/README.md b/cv/detection/centermask2/pytorch/README.md
new file mode 100644
index 0000000000000000000000000000000000000000..aa8a69c23bf12eaa849aadb33ba88a76d28deb9c
--- /dev/null
+++ b/cv/detection/centermask2/pytorch/README.md
@@ -0,0 +1,69 @@
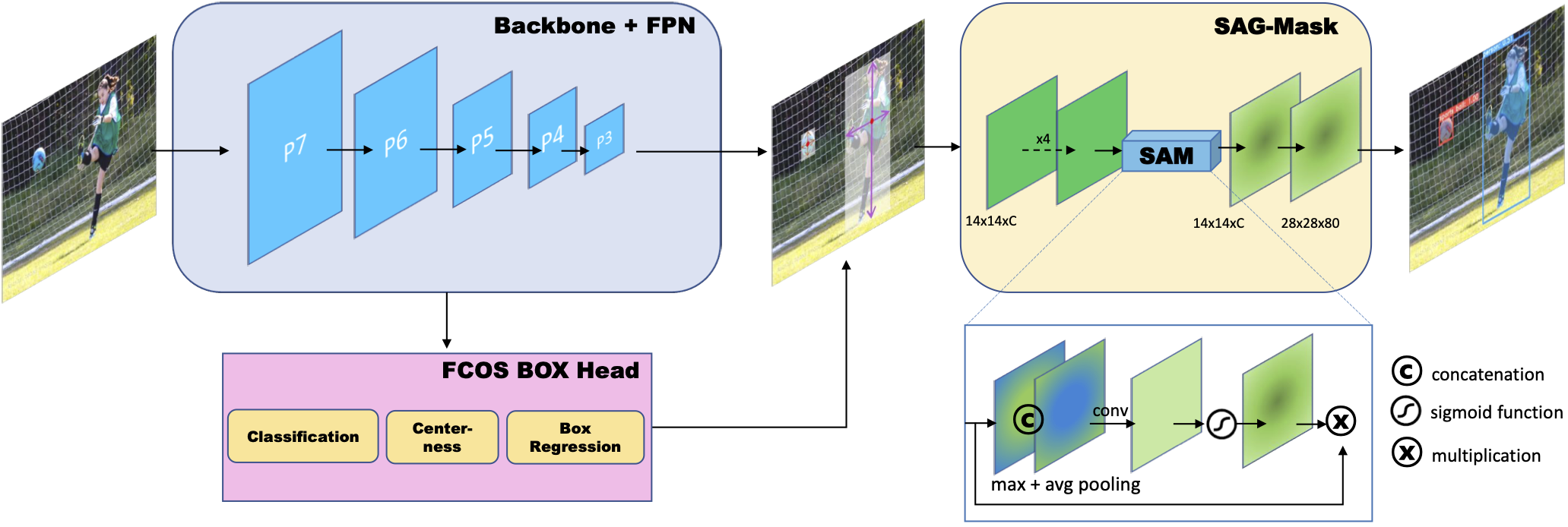

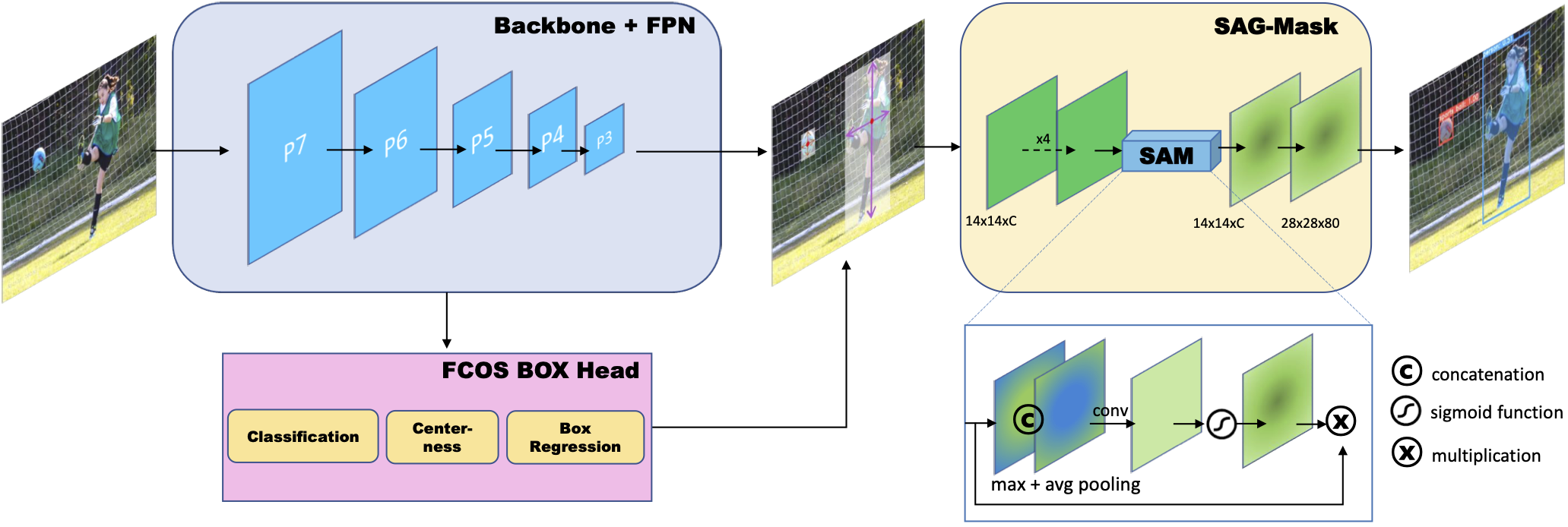

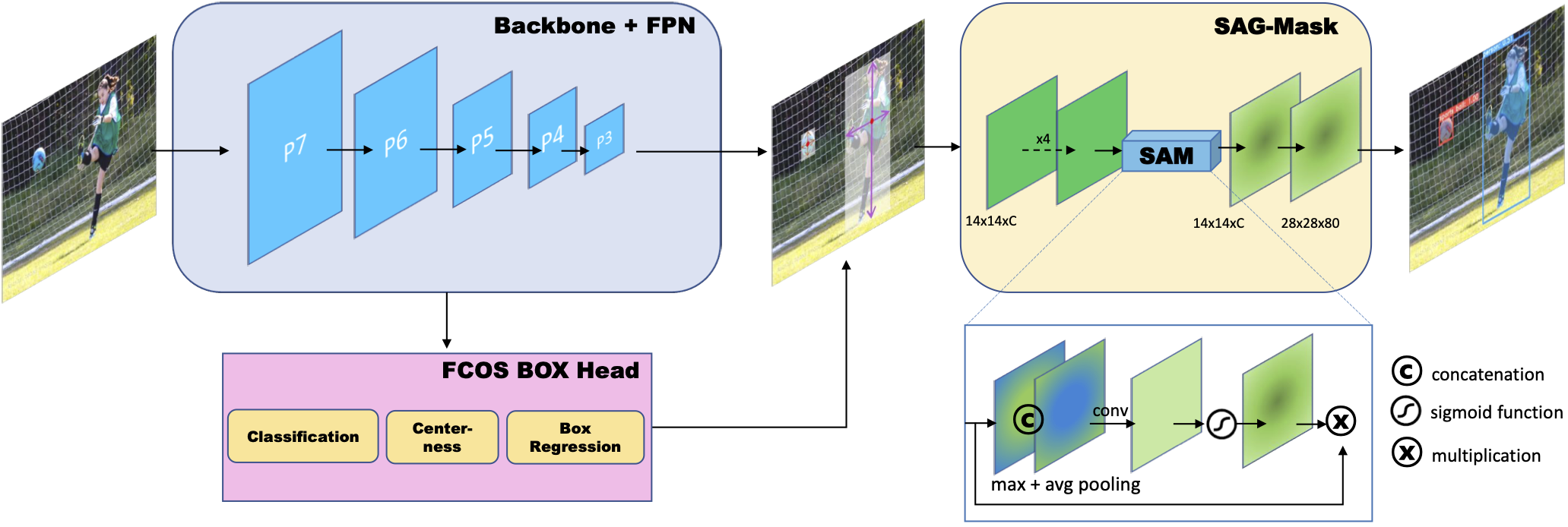

+# [CenterMask](https://arxiv.org/abs/1911.06667)2
+
+[[`CenterMask (original code)`](https://github.com/youngwanLEE/CenterMask)][[`vovnet-detectron2`](https://github.com/youngwanLEE/vovnet-detectron2)][[`arXiv`](https://arxiv.org/abs/1911.06667)] [[`BibTeX`](#CitingCenterMask)]
+
+**CenterMask2** is an upgraded implementation of [CenterMask](https://github.com/youngwanLEE/CenterMask) built on top of [detectron2](https://github.com/facebookresearch/detectron2). The original CenterMask was built on [maskrcnn-benchmark](https://github.com/facebookresearch/maskrcnn-benchmark).
+
+> **[CenterMask: Real-Time Anchor-Free Instance Segmentation](https://arxiv.org/abs/1911.06667) (CVPR 2020)**
+> [Youngwan Lee](https://github.com/youngwanLEE) and Jongyoul Park
+> Electronics and Telecommunications Research Institute (ETRI)
+> Preprint: https://arxiv.org/abs/1911.06667
+